So You Get the Need for Performance – but How Do You Improve It?

There are some eye-opening metrics out there about the correlation between performance and revenue. It has been 12 years since Amazon published research showing that every 100ms of delay cost them 1% in sales. In 2013, Intuit reported that each second of load time they cut between 15 and 7 seconds increased conversions by 3%. From 7 to 5 seconds, each second brought another 2%, and from 4 to 2 seconds, each second of improvement added a further 1%. The impact of performance on revenue adds up quickly.

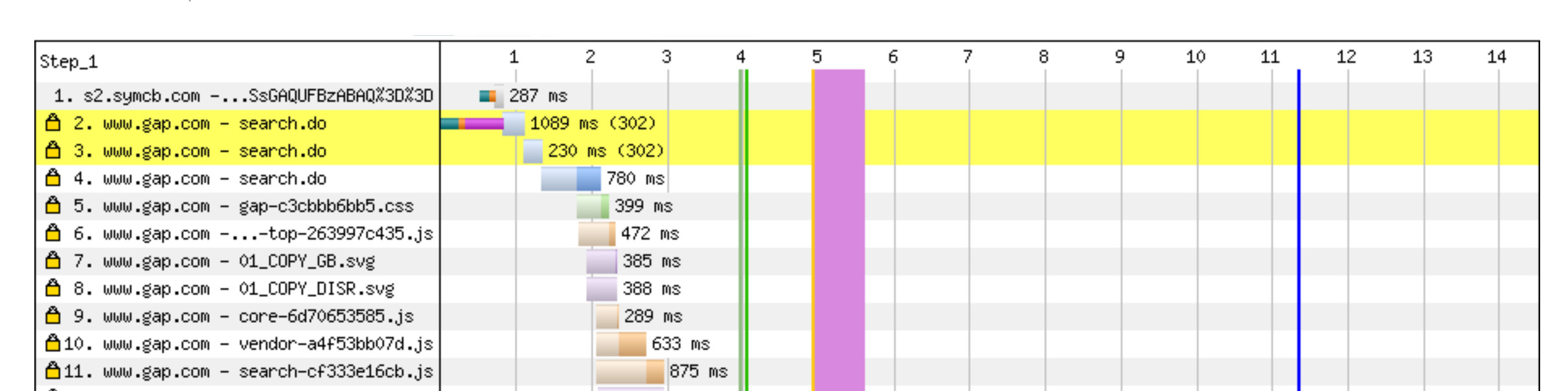

I recently visited The Gap’s website to search for new blue pants for my son, since he has outgrown his current ones. I was surprised at how long it took for the search results to start rendering. Out of curiosity, I ran a test on WebPageTest.org from San Francisco and saw that it took 4 seconds before anything started rendering. For those of you who are not aware, visitors get two mental rewards when a web page loads. The first is when it starts rendering: some activity shows up on the next page, “rewarding” them for the click. They are rewarded again when the page lets them interact with it. Here the “rewards” were almost painful.

Kissmetrics says that 40% of people will abandon a page that takes 3 seconds or more to load. Some might argue that Gap customers are loyal and will put up with the performance because they know the brand and its quality-to-price ratio. But what if the abandonment rate were only 20%? Even 10% is a serious loss of revenue. I’d also wager that the transactions that do happen involve shopping carts with fewer items, because searching takes so long. That also translates to lower profits.
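To put those abandonment rates in perspective, here is a back-of-the-envelope sketch of revenue at risk. Every input (sessions, conversion rate, average order value) is a hypothetical placeholder, not Gap data, and the model makes the simplifying assumption that visitors who abandon would otherwise have converted at the site-wide rate.

```python
def revenue_at_risk(sessions: int, abandon_rate: float,
                    conversion_rate: float, avg_order_value: float) -> float:
    """Estimate revenue lost when a share of sessions abandon before the page loads.

    Assumes abandoning visitors would otherwise have converted at the
    site-wide conversion rate (a simplification).
    """
    lost_sessions = sessions * abandon_rate
    return lost_sessions * conversion_rate * avg_order_value


# Hypothetical monthly figures, for illustration only.
for rate in (0.40, 0.20, 0.10):
    lost = revenue_at_risk(sessions=1_000_000, abandon_rate=rate,
                           conversion_rate=0.02, avg_order_value=80.0)
    print(f"{rate:.0%} abandonment -> ${lost:,.0f} lost per month")
```

Even at the 10% rate, these made-up inputs work out to $160,000 a month.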

If you understand and believe in the relationship between performance and revenue, how do you go about getting better performance? You’ve got several options, but not all of them are right or relevant for every digital property. You need to clearly understand what is impacting performance as well as what can be done about that impact. And if you also have quantifiable visibility into how those changes affect conversions, you are light years ahead of your competition.

No matter which route you choose, the foundation lies in first setting up a performance-based culture that understands and values these goals. The earlier statistics from Amazon and Intuit should be enough for Step 1 of that process. Once you’ve created a benchmark and are working toward your performance (and revenue!) goals, how do you go about it?
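A benchmark is more useful as a distribution than as a single average. The sketch below assumes you already have a list of page-load times in seconds (from synthetic tests or RUM; the sample values are made up) and uses the standard library’s `statistics.quantiles` to report the median and the tail alongside the mean.

```python
import statistics


def load_time_baseline(samples_s: list[float]) -> dict[str, float]:
    """Summarize page-load samples (seconds) as mean, median, p75 and p95."""
    # n=100 yields 99 cut points: index 49 is p50, 74 is p75, 94 is p95.
    cuts = statistics.quantiles(samples_s, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(samples_s),
        "p50": cuts[49],
        "p75": cuts[74],
        "p95": cuts[94],
    }


# Hypothetical samples: most loads are quick, a few are very slow.
samples = [1.8, 2.1, 2.0, 2.3, 1.9, 2.2, 2.4, 2.0, 6.5, 9.0]
print(load_time_baseline(samples))
```

With these samples the mean (about 3.2 s) sits well above the median (about 2.2 s), because two slow loads drag it up; tracking p75 or p95 over time shows whether the slow tail your visitors feel is actually shrinking.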

The first way some firms go about improving performance is to throw equipment at the problem. Sure, you can buy faster servers, switch cloud platforms or pay for a higher quality-of-service tier from your cloud provider, though most of this assumes your design allows for a distributed architecture. But if your SDC (Software Delivery Chain) is at all complex, this rarely makes fiscal sense. It is a brute-force move that often wastes money, and that waste eats into the revenue gains you were after.

I’ve seen firms with a dozen logical tiers in their application. How can you be sure you upgraded the right tier(s)? I also know from firsthand experience that high system load can point to other issues, such as code or SQL queries that need to be optimized. It is also possible for a system with low load to be the actual performance-killing culprit. (More on solving that further down.)

The second route is one I learned about extensively during my 4+ years at Compuware/Dynatrace: learning about, deploying and leveraging Application Performance Management (APM). The industry is very crowded, and to a certain extent the term has become diluted. You’ll quickly learn that firms like AppDynamics, Dynatrace, LightStep, New Relic and quite a few others let you peer inside your architecture and see where the bottlenecks are. The problem might be code, the database, the internal network, file size or site complexity. I’ve personally seen handled exceptions (as in “you can’t find this in your logs”) eat up 18% of the performance under load.
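APM agents instrument your code automatically and far more thoroughly; the toy sketch below only illustrates the underlying idea of attributing wall-clock time to functions so the bottleneck stands out. It is not how any vendor’s agent works, and `load_catalog` and `render_page` are hypothetical stand-ins for real application code.

```python
import time
from collections import defaultdict
from functools import wraps

# Aggregated wall-clock time (seconds) and call counts per function name.
timings: dict[str, float] = defaultdict(float)
calls: dict[str, int] = defaultdict(int)


def traced(func):
    """Record how long each call to `func` takes, even if it raises."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            timings[func.__qualname__] += time.perf_counter() - start
            calls[func.__qualname__] += 1
    return wrapper


@traced
def load_catalog():
    # Hypothetical slow dependency, e.g. a database query.
    time.sleep(0.05)


@traced
def render_page():
    # Hypothetical request handler that calls the slow dependency.
    load_catalog()
    time.sleep(0.01)


for _ in range(3):
    render_page()

# Times are inclusive: render_page's total includes time spent in load_catalog.
for name, total in sorted(timings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {calls[name]} calls, {total * 1000:.0f} ms total")
```

Comparing the two totals shows that most of `render_page`’s time is really spent in `load_catalog`, which is the kind of drill-down an APM tool gives you across every tier without hand-written decorators.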

The third route is one I learned a lot about over a two-year stretch at Fastly: leveraging CDNs where it makes sense. We joke that CDNs let you beat the speed of light. They do it by distributing your content globally and putting it closer to your end users. (If you don’t get the joke: traffic over fiber travels at roughly two-thirds the speed of light, so physical distance alone puts a floor under latency, and every extra network hop and that “last mile” add more on top. Move the content or compute closer and the end user gets it much faster. Hence you “beat” the speed of light.)
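The joke rests on simple arithmetic: distance sets a lower bound on latency. A rough sketch, assuming a refractive index of about 1.47 for optical fiber and straight-line distances (both simplifications; the distances are hypothetical):

```python
# Light in a vacuum covers about 299,792 km/s; glass fiber with a refractive
# index of ~1.47 slows it to roughly 204,000 km/s.
SPEED_IN_FIBER_KM_S = 299_792 / 1.47


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time over fiber for a one-way distance.

    Real routes are longer than straight lines and add switching and
    queuing delay, so measured RTTs are higher than this.
    """
    return 2 * distance_km / SPEED_IN_FIBER_KM_S * 1000


# Hypothetical distances: a distant origin vs. a nearby CDN edge node.
for label, km in [("origin ~9,000 km away", 9_000), ("edge ~50 km away", 50)]:
    print(f"{label}: at least {min_rtt_ms(km):.1f} ms per round trip")
```

A page load involves several round trips (DNS, TCP and TLS setup, then the HTTP requests themselves), so a gap of roughly 88 ms versus under 1 ms per round trip multiplies quickly.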

Firms like Fastly, Cloudflare and Akamai let you do this. And of course (you knew this was coming), technology like Fastly lets you go even further and cache content that you can’t cache on other providers. Do you want to spend money on cloud compute, or would you rather learn about CRUD and caching? It really is easy and can give you huge returns as well as better experiences for your customers. Your site will be more secure and dependable as well.
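A minimal sketch of what “letting the CDN cache it” can look like at the origin: a hypothetical helper that builds response headers so the edge keeps a page much longer than browsers do. `Surrogate-Control` and `Surrogate-Key` are headers Fastly documents for edge-only TTLs and tag-based purging; other CDNs use different mechanisms, so treat the header choice, the helper name and the TTL values as assumptions to check against your provider.

```python
def cache_headers(edge_ttl_s: int, browser_ttl_s: int, keys: list[str]) -> dict[str, str]:
    """Build origin response headers that let a CDN cache longer than browsers.

    - Cache-Control: what browsers (and CDNs without surrogate support) see.
    - Surrogate-Control: edge-only TTL; CDNs that support it typically strip
      it before the response reaches the browser.
    - Surrogate-Key: space-separated tags used later to purge related objects.
    """
    return {
        "Cache-Control": f"public, max-age={browser_ttl_s}",
        "Surrogate-Control": f"max-age={edge_ttl_s}",
        "Surrogate-Key": " ".join(keys),
    }


# Hypothetical search-results page: cached at the edge for a day,
# in the browser for a minute, tagged with the products it lists.
print(cache_headers(86_400, 60, ["search", "product-123", "product-456"]))
```

The long edge TTL is only safe if you can purge quickly when the underlying data changes, which is where the tags come in.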

For the second and third routes above, how do you know which one to go with? Simple top-level average page performance numbers won’t tell you enough. They can tell you that there is room for improvement and good potential to increase profits, but not how to improve that performance. There is a science to analyzing page performance. You can use tools like WebPageTest, Catchpoint or Blue Triangle to get a waterfall of the components that make up the page.
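Many of these tools can export a waterfall as a HAR (HTTP Archive) file, a JSON format with one entry per request, including a per-phase `timings` breakdown. A minimal sketch that ranks the slowest requests and names the phase that dominates each one; the tiny inline HAR is fabricated sample data, and in practice you would `json.load` an exported file.

```python
import json

# A trimmed, made-up HAR document; real exports contain many more fields.
HAR_TEXT = """
{"log": {"entries": [
  {"request": {"url": "https://www.example.com/search?q=blue+pants"},
   "time": 3900,
   "timings": {"blocked": 5, "dns": 20, "connect": 40, "send": 1, "wait": 3700, "receive": 134}},
  {"request": {"url": "https://cdn.example.com/hero.jpg"},
   "time": 450,
   "timings": {"blocked": 2, "dns": 0, "connect": 0, "send": 1, "wait": 60, "receive": 387}},
  {"request": {"url": "https://analytics.example.net/tag.js"},
   "time": 800,
   "timings": {"blocked": 10, "dns": 90, "connect": 150, "send": 1, "wait": 500, "receive": 49}}
]}}
"""


def slowest_requests(har: dict, top: int = 5) -> list[tuple[str, float, str]]:
    """Return (url, total ms, dominant timing phase) for the slowest entries."""
    rows = []
    for entry in har["log"]["entries"]:
        # HAR uses -1 for phases that don't apply, so ignore negative values.
        phases = {k: v for k, v in entry["timings"].items() if v >= 0}
        dominant = max(phases, key=phases.get)
        rows.append((entry["request"]["url"], entry["time"], dominant))
    return sorted(rows, key=lambda r: r[1], reverse=True)[:top]


for url, ms, phase in slowest_requests(json.loads(HAR_TEXT)):
    print(f"{ms:>6.0f} ms  {phase:<8} {url}")
```

As a rule of thumb, a dominant `wait` (time to first byte) points at the server side, a candidate for APM; a dominant `receive` points at payload size; and heavy `dns`/`connect` time on extra hosts points at third-party tags or content that could move to a CDN.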

Blue Triangle has an edge because it uses real user data: not synthetic tests, but the actual page loads your visitors are seeing. They also have the experience and technology to quantify exactly how revenue is tied to performance and to recommend specific pages or paths to concentrate your efforts on. Even better, they can quantify the additional revenue you’d get from a specific performance improvement. Some APM solutions also provide RUM (Real User Monitoring) data on the true end-user experience. I am most familiar with Dynatrace’s User Experience Management, but I am sure there are similar offerings from other APM players. (Using a different one for RUM waterfalls? Let me know!)

Based on those details, you can then decide how much improvement each of the following steps would bring:

- Simplify the page. This might mean reducing the number of images, optimizing those images, or removing extra analytics scripts. That last one matters because third-party analytics and tracking tools can really hurt performance. With some CDNs you can get free real-time logging of nearly all your traffic and process those logs instead of bogging down the page with extra analytics. It is probably possible to drop the analytics JavaScript tags and instead parse your logs to produce the same aggregated metrics. Of course, you still need to understand the user journey and where visitors run into friction that is hurting your sales funnel. (Another spot where Blue Triangle should be considered.)

- Improve the actual compute power of the site. This might mean blindly deploying more (and better) infrastructure. But if you are really smart, you’ll dive into APM first and get some visibility into what is really going on.

- Use APM to see where the bottlenecks are within your actual code or SQL. (Yes, you’ll even gain insight into the queries being run and how long they take. APM often does this without touching your database tier in any way.)

- Move as much of the content as you can out to the edge with a CDN. Let someone else leverage economies of scale to deploy fast, tuned networks and lots of RAM and SSDs to host your content and logic at the edge.
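For the first bullet’s idea of replacing analytics tags with log processing, here is a minimal sketch. It assumes the CDN streams logs in the Apache/NCSA “combined” format (your provider’s format may differ and is usually configurable) and counts successful page views per path plus distinct client IPs; the sample log lines are made up, and counting visitors by IP is a crude approximation.

```python
import re
from collections import Counter

# Combined Log Format: host ident user [time] "request" status bytes "referer" "agent"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) \S+ '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

STATIC_SUFFIXES = (".js", ".css", ".png", ".jpg", ".svg", ".woff2")


def page_views(lines):
    """Count successful page GETs per path (static assets excluded) and distinct IPs."""
    views = Counter()
    visitors = set()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or m["method"] != "GET" or m["status"] != "200":
            continue
        path = m["path"].split("?", 1)[0]  # drop the query string
        if path.endswith(STATIC_SUFFIXES):
            continue
        views[path] += 1
        visitors.add(m["ip"])  # crude: NAT and proxies merge users
    return views, len(visitors)


SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /search?q=blue+pants HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.5 - - [10/Oct/2023:13:55:37 +0000] "GET /static/app.js HTTP/1.1" 200 90210 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:01 +0000] "GET /search?q=jeans HTTP/1.1" 200 4890 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:09 +0000] "GET /product/123 HTTP/1.1" 404 512 "-" "Mozilla/5.0"',
]

print(page_views(SAMPLE_LOG))
```

This covers server-visible metrics only; client-side behavior such as scrolling or time on page still needs some form of in-page measurement.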

It does take some knowledge of web architecture to know which components of the page can be accelerated, and how. Typically, content that doesn’t change (or changes only in response to events) can be cached. Truly dynamic content that has to stay in the page is often a job for APM, to see what can be done to speed it up. And there is a whole black art to the order in which objects load and how changing that order affects page rendering.

One area where I would urge strong caution is marketing and semantics. For both CDNs and APM, many firms list very similar bullet points about what they do. You’ll hear terms like “instant purge” or “instant configuration”, where “instant” can have dramatically different meanings. There is a big difference in what you can cache if purging takes minutes rather than milliseconds. The same goes for “minimal” or “negligible” performance impact of the agents on the APM side. So when you decide which route to take, do your homework. Don’t shop purely on marketing or price point. Head-to-head trials with defined, quantifiable metrics and apples-to-apples comparisons make for the most informed decisions. Make sure you understand whether the solution(s) will solve your problem, generate positive income and deliver a great ROI.

A fourth way to improve performance (if relevant to your site) is to look at Instant Search. Firms like Algolia have a Search as a Service platform that makes it easy to change your search so it is not only instant, but more relevant too. This alone is almost a topic for an entire article. If I haven’t written that yet, reach out to them for a demo to better understand what they do and how they work. Their platform can return search results in 50ms on average, fast enough to feel truly instant. And they have powerful logic for making those results more relevant to the user. Again, a topic for a whole other post!

So companies like The Gap would have three choices here. They could cache searches with a CDN like Fastly and automate purges when the catalog changes. They could leverage APM to find the performance bottlenecks and fix them. Or they could make their search experience like the one at Quicksilver by using a Search as a Service platform. (On that site, click the Search button at the top and try something like “blue pants”. Algolia powers the search, and the response time and faceting are exciting.)
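The first of those choices, caching searches and purging when the catalog changes, usually relies on tagging cached responses. Below is a toy in-memory model of tag-based (“surrogate key”) purging, assuming each cached search result is tagged with the IDs of the products it lists. A real CDN does this across its whole edge network through its own purge API, which this sketch does not call; the class and method names are hypothetical.

```python
from collections import defaultdict
from typing import Optional


class TaggedCache:
    """Toy cache where entries carry tags and can be purged by tag."""

    def __init__(self):
        self.entries: dict[str, str] = {}
        self.keys_by_tag: dict[str, set[str]] = defaultdict(set)

    def put(self, cache_key: str, body: str, tags: list[str]) -> None:
        self.entries[cache_key] = body
        for tag in tags:
            self.keys_by_tag[tag].add(cache_key)

    def get(self, cache_key: str) -> Optional[str]:
        return self.entries.get(cache_key)

    def purge_tag(self, tag: str) -> int:
        """Evict every entry tagged with `tag`; return how many were evicted.

        For simplicity, stale references under other tags are left behind;
        popping an already-evicted key is a harmless no-op.
        """
        evicted = 0
        for cache_key in self.keys_by_tag.pop(tag, set()):
            if self.entries.pop(cache_key, None) is not None:
                evicted += 1
        return evicted


cache = TaggedCache()
cache.put("/search?q=blue+pants", "<results A>", ["product-123", "product-456"])
cache.put("/search?q=jeans", "<results B>", ["product-456", "product-789"])
cache.put("/search?q=hats", "<results C>", ["product-999"])

# Catalog event: product-456 changed (price, stock...), so every cached
# search that lists it is evicted; unrelated searches stay cached.
evicted = cache.purge_tag("product-456")
print(evicted, cache.get("/search?q=hats"))
```

The design choice is to purge by what changed rather than by URL: a single catalog update invalidates exactly the search pages that mention the product, without the origin having to know every query string that was cached.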

One important point: when you make a site faster, you need to take more than just speed into account. In the Algolia example, you would also be dramatically changing the user experience. Instant Search is a very different experience in a positive way, but that experience is also shaped by the search results becoming much more relevant. Any change like this alters the digital experience, and that alone is a topic worthy of an entire article.

Speed is not the only factor: the change itself can affect the experience in other ways. One of Blue Triangle’s videos gives an excellent example of a change that improved performance but hurt both the site’s effectiveness and its revenue. At first that seems hard to imagine, but consider another way to improve performance: optimizing images. One firm took the optimization too far, producing photos that either didn’t do the products justice or hurt perceptions of the site’s quality. With Blue Triangle, they were able to quantify which level of image optimization led to the best conversion rate. Being able to measure the correlation between speed, image quality and conversions is impressive! Speed and image quality are, of course, inversely related: higher-quality (higher-resolution) images are larger and load more slowly. But there is a sweet spot where the perceived quality of the product balances against the perceived quality of the site, which is heavily influenced by speed!
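The speed side of that trade-off is simple arithmetic: transfer time grows linearly with file size. A rough sketch, assuming a hypothetical 5 Mbit/s connection and made-up file sizes for one product photo at three quality settings, and ignoring latency and parallel downloads:

```python
def transfer_ms(size_bytes: int, bandwidth_mbps: float) -> float:
    """Milliseconds to move `size_bytes` over a `bandwidth_mbps` (megabit/s) link, ignoring latency."""
    bits = size_bytes * 8
    return bits / (bandwidth_mbps * 1_000_000) * 1000


# Hypothetical sizes for one product photo at different quality levels.
variants = {"high": 600_000, "medium": 250_000, "low": 90_000}
for name, size in variants.items():
    print(f"{name:>6}: {size / 1000:.0f} KB -> {transfer_ms(size, 5.0):.0f} ms at 5 Mbit/s")
```

The arithmetic only tells you what each quality level costs in time; which variant actually converts best is the part that needs real user data.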

The last option that comes to mind is FEO (Front End Optimization). My experience here is limited to a few customers who had mixed experiences with some of the solutions out there. Those solutions used a proxy that rewrote the page and altered the content in real time, as well as optimizing images. If the site went through an architecture change, those changes had to be tested on the proxy before going live. You were basically letting someone else put your content through a black box to modify it. Because of that, visibility was quite limited. You were never certain that rendering was consistent across browsers and operating systems. There is a good chance I’m doing a disservice to firms that do FEO, so if you have had an experience with it (good or bad), please do share it with me!

Hopefully this article has given you evidence that web performance directly impacts revenue, and shown that you have multiple options for improving performance once you have created a performance culture that tracks baselines and works to improve them. Yes, it does take some experience to know what specifically to fix and how. But there are firms out there that can help either fix the issue or correlate it to revenue. And if I’ve missed any other routes to improve performance, do let me know.